Thanks Apple, Welcome Vision Pro! (ft. Spatial Computing & Spatial Audio)

Thanks Apple, Welcome Vision Pro! (ft. Spatial Computing & Spatial Audio)

(Writer: Henney Oh)

ONE MORE THING!

At the WWDC in June 2023, Apple grandly showcased their “One More Thing,” a device named Vision Pro, breaking new ground in the realm of Spatial Computing. In typical Apple fashion, they opted to categorize it as a “Spatial Computing” device, eschewing labels like ‘VR HMD’ or ‘AR glasses.’

Gaudio Lab made its first steps into the VR market in 2014, identifying itself and its target market as The Spatial Audio Company for VR. As a result, one of the most frequently asked question has been: “When do you anticipate the VR market will surge?” In response, I cleverly, or perhaps coyly, have always stated, “The day Apple launches a VR device.” 😎

And finally, that day has arrived, precisely a decade later.

(Apple has announced that Vision Pro will debut in Spring 2024)

In the Apple keynote at this year’s WWDC, a major portion of the device introduction session was devoted to explaining the Spatial Audio incorporated into Vision Pro. Apple has consistently invested significant effort into audio – a feature often unnoticed but nonetheless influential to the user experience. It’s worth remembering that the device that catalyzed Apple’s rise was an audio device, the iPod.

[Image: Vision Pro’s Dual Driver Audio Pods (speakers) featuring built-in Spatial Audio]

Spatial Audio, NICE TO HAVE → MUST HAVE

Apple first debuted its Spatial Audio technology in the AirPods Pro back in 2020. At that time, I hypothesized: “This Spatial Audio represents a strategic primer for the VR/AR device Apple will release in the future.”

While the Spatial Audio experience on a smartphone (or a TV) – essentially viewing a 2D screen within a small window – can be deemed as nice-to-have (useful, but not essential), it becomes a must-have within a VR environment. For instance, in a virtual scenario, the sound of a dog barking behind me shouldn’t seem as if it’s coming from the front.

In a previous post (link), I explained that terms like VR Audio, Immersive Audio, 3D Audio, and Spatial Audio may vary, but their fundamental meaning is essentially the same. They all refer to the technologies that create and reproduce three-dimensional sound.

Is it merely conjecture to suggest that Apple, preparing to launch a Spatial Computing Device in 2024, had already termed their 3D audio technology ‘Spatial Audio’ when it was integrated into the AirPods five years prior?”

Mono → Stereo → Spatial : Transformations in Sound Perception

To begin with, human beings perceive sounds in three dimensions in their immediate environment. We can discern whether the sound of a nearby colleague typing comes from our left or from a position behind and beneath us. This is made possible through the sophisticated interplay of our auditory system and the brain’s binaural hearing skills, employing just two sensors – our ears. As a result, the ideal scenario for all sound played back through speakers or headphones would be a three-dimensional reproduction.

However, due to limitations of devices such as speakers and headphones/earphones, and constraints of communication and storage technologies, we have been conditioned to store, transmit, and reproduce sounds in a 2D (stereo) or 1D (mono) format.

Think of a lecture hall where a speaker’s voice is broadcast through speakers mounted on the ceiling. Even when there’s a noticeable discrepancy between visual cues and the sound’s origin, we adapt without considering the situation strange. This holds true in large concert venues, where sound reaches thousands of audience members through wall-mounted speakers, not directly from the performers onstage. Our adaptability and capacity to learn have enabled us to become comfortable with this mode of sound delivery, and over time, we’ve even developed a learned preference for it.

An apt example can be found in the Atmos Mix, a type of spatial sound format. It is often criticized for sounding inferior to the traditional Stereo Mix. The market standard for an extended period, stereo has been used predominantly in studio recordings, leading us to become accustomed to it. However, reflecting on the past, there was significant resistance from both artists and users during the transition from mono to stereo. This suggests that a future where we become more familiar and comfortable with the Spatial Audio Mix is possible.

Apple’s Commitment to Perfecting Spatial Sound : The Vision Pro

Using Vision Pro can simulate an experience reminiscent of a remote meeting on Star Trek’s Holodeck, making it seem as though the other participant is physically present in the room with you. This could represent the pinnacle of a “Being There” or “Being Here” experience. To facilitate this, Spatial Audio is indispensable. Our brains need to perceive the sound as emanating from the individual in front of us, as if they were truly present, in order to trigger a place illusion. As we turn our heads, the apparent location of the sound must adjust accordingly. Spatial Audio for headphones, fundamentally rooted in *Binaural Rendering, provides exactly this function.

To understand more about Binaural Rendering and its applications...,

Spatial Computing Devices, such as Vision Pro, which encompass both VR and AR technologies, are fundamentally personal display systems. These devices are designed to provide an immersive, individualized visual experience. As such, it is inherent in their design to use headphones for sound reproduction instead of speakers. Binaural rendering is the foundational technology that enables the realization of spatial audio through headphones. The term “binaural” originates from Latin, literally meaning “having two ears”. Humans, equipped with just two ears, can perceive the directionality of sounds – front, back, left, right, above, and below. This is made possible by the diffraction and acoustic shadow phenomena of sounds as they enter our ear canal and resonate throughout our bodies. Binaural rendering is a technology that simulates this natural mechanism and replicates it through headphones, enabling the positioning of sounds within a three-dimensional space.

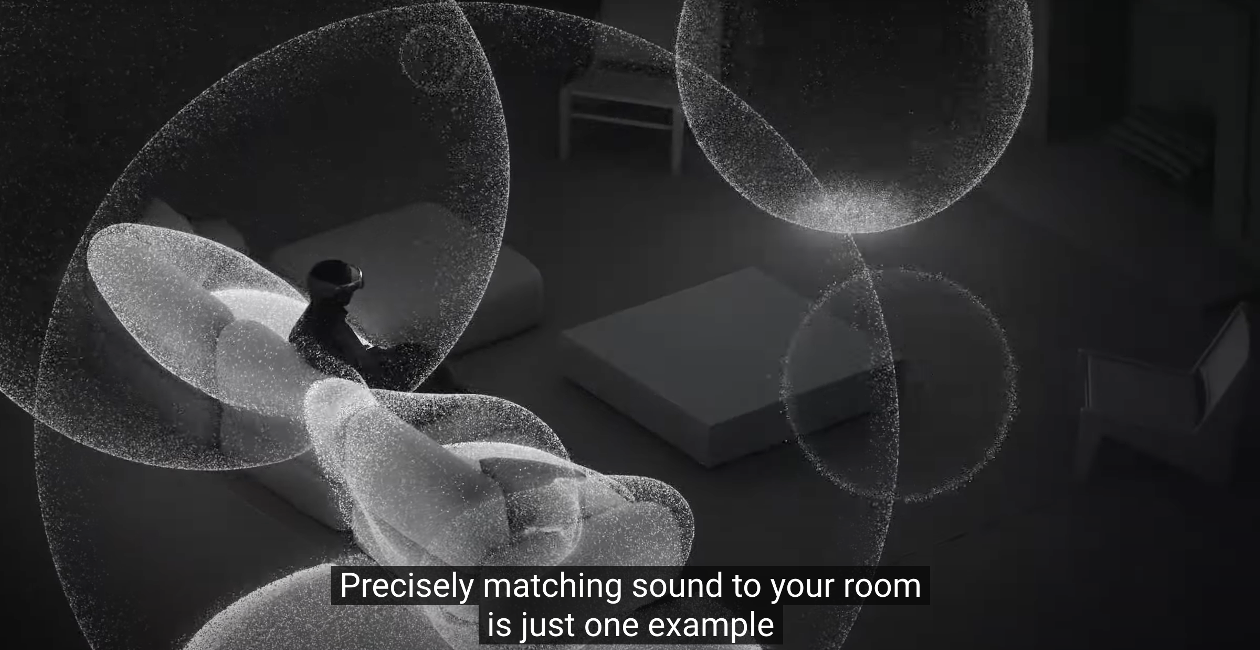

To convincingly simulate sounds as if they are emanating from within the physical environment, it’s necessary to understand and model the paths that these sounds would traverse to reach our ears in real-world scenarios (sound characteristics change as they interact with various objects, such as walls, sofas, and ceilings). Vision Pro reportedly incorporates Audio Ray Tracing technology to achieve this goal. Although this requires extensive computational power, it is testament to the capabilities of Apple’s silicon (M2 & R1) and underlines Apple’s commitment to perfecting spatial audio.

[Image: Audio Ray Tracing - Screen capture from the Vision Pro Keynote at WWDC 2023]

Thanks Apple, Welcome Vision Pro!

Gaudio Lab has been a pioneer in the spatial audio field, launching a comprehensive suite of innovative tools as early as 2016 and 2017. The suite includes: Works (a tool that allows sound engineers to effortlessly edit and master spatial audio for VR 360 videos within existing sound creation environments like Pro Tools), Craft (a tool that enables the integration of spatial audio into VR content created using game engines such as Unity or Unreal), and Sol (a binaural rendering SDK that enhances head-tracking information on devices like HMDs and smartphones to provide real-time spatial audio experiences).

[Image: VR Audio = Gaudio Lab Keynote]

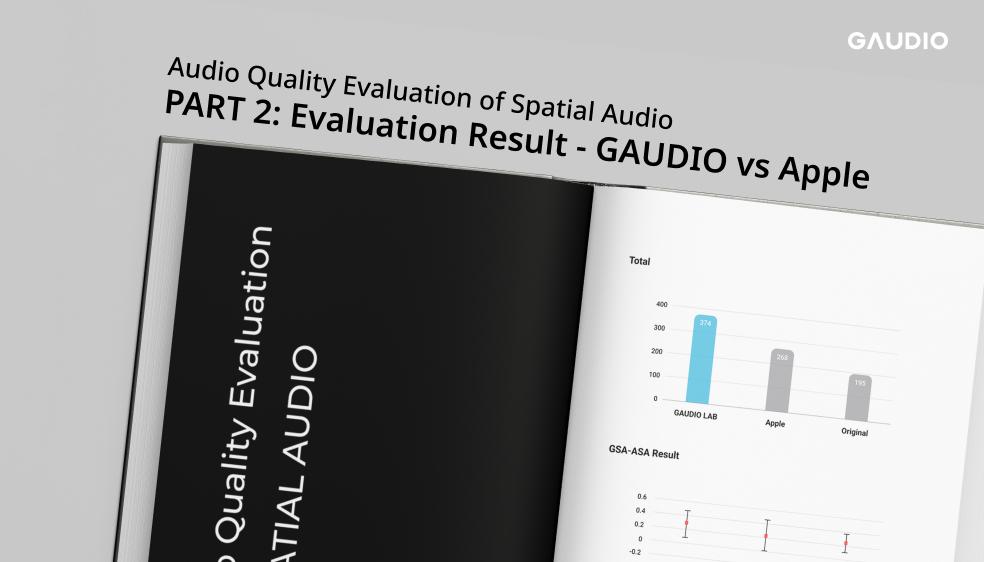

Post-2018, the VR market experienced a significant downturn, leading to the closure of many tech firms in this domain. However, the team at Gaudio Lab has navigated these turbulent times with resilience, pivoting our technology to suit existing markets and products while refining our techniques. Among our noteworthy accomplishments are:

- BTRS (the successor to Works), which enables a fully immersive spatial audio experience using standard headphones in a smartphone or 2D screen live-streaming environment.

- GSA (the successor to Sol), provides a spatial audio experience even when listening to ordinary stereo signals on devices such as earbuds and headphones.

Gaudio Lab’s laboratory is overflowing with a variety of spatial audio products and cutting-edge technologies, living up to its reputation as ‘The Original Spatial Audio Company.’ These include spatial audio for in-car environments, stereo speakers, sound bars, and cinematic settings.

Furthermore, at the upcoming AES 2023 International Conference on Spatial and Immersive Audio (August 23-25, 2023, University of Huddersfield, UK), Gaudio Lab will be presenting a paper on our latest research, titled ‘Room Impulse Response Estimation in a Multiple Source Environment.’

This research centers on innovative AI technology that amplifies immersion by autonomously recognizing and extracting a space’s acoustic characteristics from existing ambient sounds, such as conversational voices. This bypasses the need for distinct equipment such as Apple’s Audio Ray Tracing.

The advent of spatial audio in 2D screen environments like smartphones, TVs, and cinemas is just the tip of the iceberg. The anticipation is indeed tangible as we await the dawn of the Spatial Computing era, where Gaudio Lab’s extensive spatial audio technologies can truly come into their own.

We’ve waited long enough. Thanks, Apple. Welcome, Vision Pro!